Introducing VerifyML

An open-source governance framework for building responsible AI, created as part of the MAS Veritas initiative

Cylynx

October 19, 2021 · 5 min read

Recently, we participated in the Global Veritas Challenge, a competition held by the Monetary Authority of Singapore (MAS) that

… seeks to accelerate the development of solutions which validate artificial intelligence and data analytics (AIDA) solutions against the fairness, ethics, accountability and transparency (FEAT) principles, to strengthen trust and promote greater adoption of AI solutions in the financial sector.

This post describes our solution to the Challenge: VerifyML.

Note 1: In this post, the term “bias” refers to the concept of injustice / unfairness, instead of bias in the statistical sense. Where it refers to the latter, it will be explicitly stated.

Note 2: Although FEAT refers to 4 different concepts, this Challenge’s main focus is on fairness.

To understand the existing AI fairness landscape, we consulted research papers, interviewed experts, and surveyed existing tools in this space. Through this, we found that current solutions tended towards one of two approaches to tackle bias in machine learning (ML) models:

Both approaches have their merits, but there are limitations to be considered as well:

Hence, we sought to create a framework that enables a holistic approach to building ML models — one that considers social/business contexts, operations, and model performance collectively. We felt that such a solution would combine the advantages described above, and align more closely with the spirit of MAS’ FEAT principles.

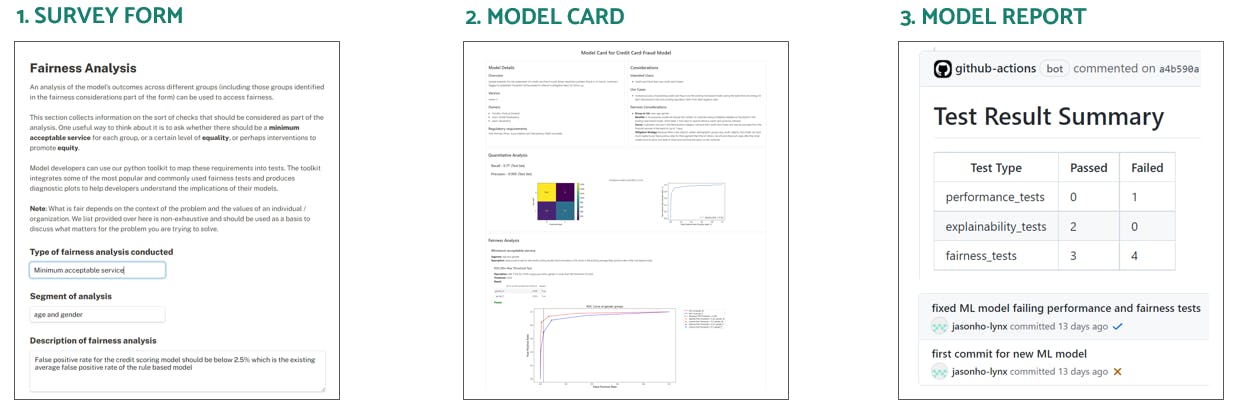

Thus was born VerifyML — an open-source governance framework to build reliable and fair ML models. It consists of three parts:

The first part is a web form to gather inputs and align stakeholders across product, data science, and compliance about model requirements. It enables comprehensive model assessments throughout the development process to keep everyone on the same page, and the answers can be continually refined to suit an organisation’s needs. Try it out with our demo web form and you can receive a copy of the responses in JSON format via email.

Adapted from Google’s Model Card Toolkit, this is the source of truth for all information relating to a particular model. Using our Python library, a Model Card can be bootstrapped using the survey form response received via email, or created from scratch.

Rather than aimlessly comparing different fairness metrics, we believe that fairness evaluation should follow a structured approach, guided by an ethics committee. One approach that we find practical and effective is to identify groups at risk and ask whether any of the following definitions of fairness should be applied to them:

Model tests can then be added to evaluate if a model meets various performance, explainability, and fairness criteria. Currently, VerifyML provides 5 types of tests:

These provide a way to check for unintended biases or model drift over time. The choice of tests will differ from project to project, since it depends heavily on an organisation’s overall objectives (as previously defined in the survey form).
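To make the idea of a fairness-oriented model test concrete, here is a minimal sketch in plain Python. It does not use the VerifyML API: the function names, the choice of false positive rate as the metric, the threshold, and the toy data are all assumptions made for illustration. The test passes only if the gap in false positive rates between groups stays within an agreed threshold.

```python
from collections import defaultdict


def false_positive_rates(y_true, y_pred, groups):
    """Return the false positive rate for each group value."""
    false_pos = defaultdict(int)  # predicted 1 when the truth is 0
    negatives = defaultdict(int)  # all cases where the truth is 0
    for truth, pred, group in zip(y_true, y_pred, groups):
        if truth == 0:
            negatives[group] += 1
            if pred == 1:
                false_pos[group] += 1
    return {g: false_pos[g] / negatives[g] for g in negatives}


def subgroup_disparity_test(y_true, y_pred, groups, threshold):
    """Hypothetical test: pass if the largest FPR gap is within threshold."""
    rates = false_positive_rates(y_true, y_pred, groups)
    gap = max(rates.values()) - min(rates.values())
    return {"rates": rates, "gap": gap, "passed": gap <= threshold}


# Toy data (made up): two groups of five people each.
y_true = [0, 0, 0, 0, 1, 0, 0, 0, 0, 1]
y_pred = [1, 0, 0, 0, 1, 1, 1, 0, 0, 1]
groups = ["A"] * 5 + ["B"] * 5

result = subgroup_disparity_test(y_true, y_pred, groups, threshold=0.2)
print(result)
```

In this toy example, group A has a false positive rate of 0.25 and group B has 0.5, so the test fails at a threshold of 0.2. Which metric and threshold are appropriate is exactly the kind of decision that should come from the stakeholder discussion captured in the survey form.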

After a Model Card is created, the information is stored in a protobuf file, which can be exported to an HTML or Markdown file as a business report.

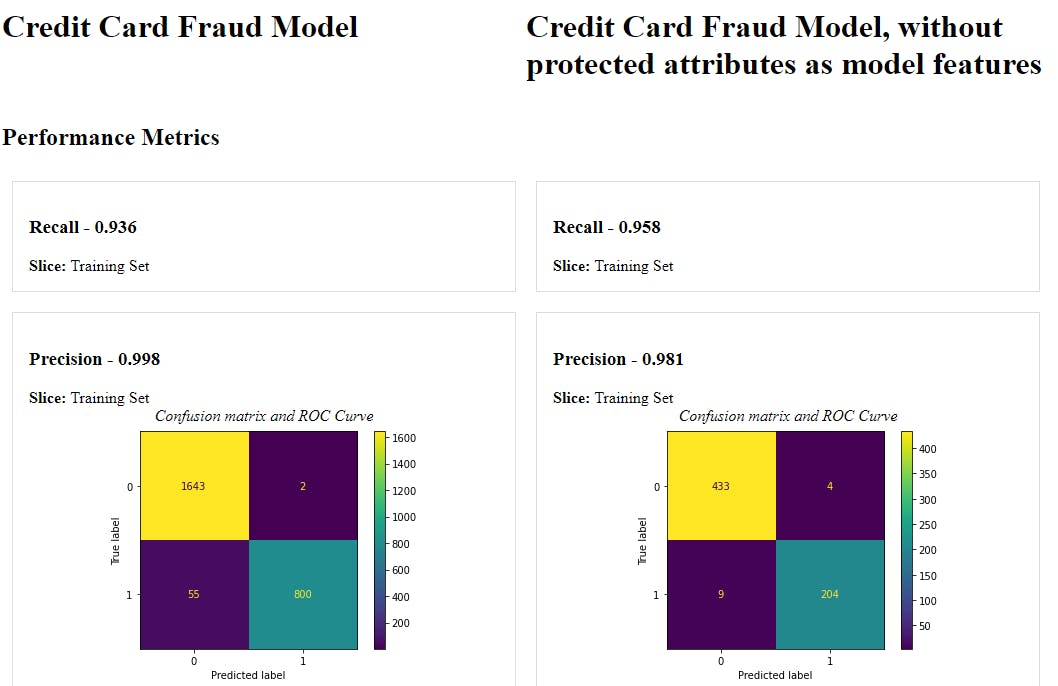

In addition, a model card can be compared against another side-by-side, enabling easy tradeoff comparison. For example, we might check how a model that uses protected attributes performs against an equivalent model that doesn’t (i.e. fairness through unawareness):

A tradeoff comparison between a model that uses protected attributes, against another that does not

With this information, we can make better decisions about which model to use — does the use case warrant higher recall? What are the business / human costs of using a model that has lower precision? Does it pass the fairness model tests? Thinking through and documenting answers to such questions helps an organisation reach informed and justifiable conclusions, regardless of whether protected attributes are used.
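The kind of tradeoff described here can be sketched with a few lines of plain Python. The predictions below are invented toy data, and the helper `precision_recall` is a hypothetical name rather than part of VerifyML; the point is only to show how two candidate models might be lined up on the same metrics.

```python
def precision_recall(y_true, y_pred):
    """Compute precision and recall for binary labels (1 = positive)."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == 1 and p == 1)
    fp = sum(1 for t, p in pairs if t == 0 and p == 1)
    fn = sum(1 for t, p in pairs if t == 1 and p == 0)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    return {"precision": precision, "recall": recall}


# Toy labels and predictions (made up for illustration).
y_true = [1, 1, 1, 1, 0, 0, 0, 0]
with_protected = [1, 1, 1, 0, 1, 0, 0, 0]
without_protected = [1, 1, 0, 0, 0, 0, 0, 0]

comparison = {
    "with_protected_attributes": precision_recall(y_true, with_protected),
    "without_protected_attributes": precision_recall(y_true, without_protected),
}

for name, metrics in comparison.items():
    print(f"{name}: precision={metrics['precision']:.2f}, "
          f"recall={metrics['recall']:.2f}")
```

Here the first model trades some precision for higher recall, while the second does the opposite. Neither is better in the abstract; the answer depends on the costs discussed above.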

A Model Report contains automated documentation and alerts from model test results generated via GitHub Actions. Like a typical unit testing CI/CD workflow, it can be set to validate a Model Card on every GitHub commit, reporting a summary of the test results to all users in the repository. This provides a foundation for a good development workflow, since everyone can easily identify when a model is production-ready, and when it isn’t.
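As a rough sketch of how such a check could gate a CI run, the snippet below turns a set of test outcomes into a short report and an exit code. The `summarise` function, the test names, and the results are hypothetical and do not reflect how VerifyML's own GitHub Action is implemented.

```python
def summarise(results):
    """Build a text report and an exit code from {test_name: passed}."""
    failed = [name for name, ok in results.items() if not ok]
    lines = [f"{'PASS' if ok else 'FAIL'}  {name}" for name, ok in results.items()]
    lines.append(f"{len(results) - len(failed)}/{len(results)} tests passed")
    exit_code = 1 if failed else 0  # non-zero marks the CI step as failed
    return "\n".join(lines), exit_code


# Hypothetical outcomes of the model tests attached to a Model Card.
example_results = {
    "minimum_recall": True,
    "false_positive_rate_gap": False,
    "feature_importance": True,
}

report, exit_code = summarise(example_results)
print(report)
# In a CI job, the script would finish with sys.exit(exit_code),
# so that any failing model test fails the build.
```

Returning a non-zero exit code is the usual way for a script to signal failure to a CI runner, which is what lets a failing model test block a merge in the same way a failing unit test would.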

To get an idea of how the workflow described above might look in practice, take a look at our example notebooks here.

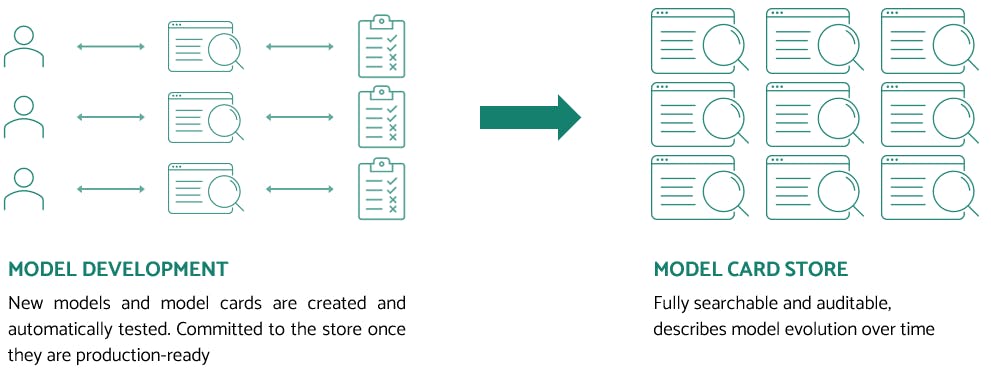

When implemented at scale, we imagine the core of VerifyML to be an easily searchable central Model Card store that contains full details of an organisation’s models (including third-party ones). Every time a new Model Card is developed, model tests are run automatically via separate workers, and all tests should pass before a card is committed to the store.
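A toy version of this idea, under heavy simplifying assumptions, might look like the sketch below. The `ModelCardStore` class is hypothetical: it keeps cards in memory, represents a card as a plain dictionary, and takes test results as precomputed booleans rather than running them on separate workers.

```python
class ModelCardStore:
    """Hypothetical in-memory store that only accepts fully passing cards."""

    def __init__(self):
        self._cards = {}

    def commit(self, name, card, test_results):
        """Store a card only if every model test passed."""
        failed = [test for test, ok in test_results.items() if not ok]
        if failed:
            raise ValueError(f"Cannot commit {name!r}; failing tests: {failed}")
        self._cards[name] = card

    def search(self, keyword):
        """Return names of cards whose name or description mentions keyword."""
        keyword = keyword.lower()
        return sorted(
            name
            for name, card in self._cards.items()
            if keyword in name.lower()
            or keyword in card.get("description", "").lower()
        )


store = ModelCardStore()
store.commit(
    "credit-scoring-v2",
    {"description": "Loan approval model"},
    {"minimum_recall": True},
)

try:
    store.commit(
        "fraud-v1",
        {"description": "Card fraud detector"},
        {"false_positive_rate_gap": False},
    )
    rejected = False
except ValueError:
    rejected = True  # the card with a failing test never reaches the store

print(store.search("loan"), rejected)
```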

Current fairness solutions can be effective in the right context, but using any one of them in isolation is insufficient for preventing bias in machine learning. VerifyML addresses this problem by combining them and taking a more comprehensive approach to model development, allowing the strengths of each to offset the limitations of the others.

With its 3 components, VerifyML is a toolkit for teams to document findings and evolve along a model’s development lifecycle. It improves model reliability, reduces unintended biases, and provides safeguards in a model deployment process, helping its users work towards the goal of fairness through awareness.

Try it out by installing the package with `pip install verifyml` and going through the examples on GitHub. Feel free to reach out through the GitHub repository for technical questions, or through our contact-us page if you would like to collaborate with us on proofs of concept.